Upload Large Files to Azure Blob Storage

What's the large deal about file uploads? Well, the large deal is that information technology is a catchy performance. Implement file uploads in the wrong way, and you may end up with memory leaks, server slowdowns, out-of-memory errors, and worst of all unhappy users.

With Azure Blob Storage, at that place multiple unlike ways to implement file uploads. But if you lot desire to let your users upload large files you will almost certainly want to exercise it using streams. You'll find a lot of file upload examples out there that use what I telephone call the "minor file" methods, such as IFormFile, or using a byte array, a memory stream buffer, etc. These are fine for pocket-sized files, but I wouldn't recommend them for file sizes over 2MB. For larger file size situations, nosotros need to be much more than careful about how we procedure the file.

What NOT to do

Here are some of the Don'ts for .NET MVC for uploading big files to Azure Blob Storage

DON'T do it if you don't have to

You lot may exist able to utilize customer-side direct uploads if your architecture supports generating SAS (Shared Access Signature) upload Uris, and if you lot don't need to procedure the upload through your API. Handling large file uploads is complex and before tackling it, you should meet if you can offload that functionality to Azure Blob Storage entirely.

DON'T use IFormFile for large files

If you let MVC try to bind to an IFormFile, it will try to spool the entire file into memory. Which is exactly what we don't want to practise with large files.

DON'T model demark at all, in fact

MVC is very skilful at model binding from the web request. But when it comes to files, any sort of model binding will attempt to…you guessed it, read the entire file into retentivity. This is slow and it is wasteful if all we desire to do is forward the data right on to Azure Hulk Storage.

DON'T utilise any memory streams

This one should be kind of obvious, because what does a memory stream do? Yes, read the file into memory. For the same reasons as above, we don't want to do this.

DON'T use a byte array either

Yeah, same reason. Your byte array volition work fine for small files or light loading, but how long will you accept to wait to put that large file into that byte array? And if there are multiple files? Merely don't do information technology, at that place is a meliorate way.

So what are the DOs?

There is one instance in Microsoft's documentation that covers this topic very well for .NET MVC, and information technology is hither, in the final department about big files. In fact, if you are reading this article I highly recommend you read that entire certificate and the related instance because information technology covers the large file vs small file differences and has a lot of great information. And just get alee and download the whole example, because information technology has some of the pieces we need. At the time of this article, the latest version of the sample code bachelor is for .Cyberspace Cadre 3.0 but the pieces nosotros need will work simply fine with .Internet 5.

The other piece we demand is getting the file to Azure Blob Storage during the upload process. To do that, we are going to use several of the helpers and guidance from the MVC example on file uploads. Here are the important parts.

Exercise use a multipart form-information request

Yous'll see this in the file upload example. Multipart (multipart/course-data) requests are a special type of asking designed for sending streams, that can also back up sending multiple files or pieces of data. I think the explanation in the swagger documentation is besides actually helpful to understand this type of request.

The multipart asking (which can really be for a unmarried file) tin exist read with a MultipartReader that does Not need to spool the trunk of the request into retentivity. By using the multipart form-data request you can also back up sending additional data through the request.

Information technology is of import to note that although it has "multi-part" in the name, the multipart request does not mean that a single file will be sent in parts. It is not the same every bit file "chunking", although the proper name sounds similar. Chunking files is a separate technique for file uploads – and if you lot need some features such as the ability to suspension and restart or retry partial uploads, chunking may be the style yous demand to go.

Exercise prevent MVC from model-bounden the request

The example linked above has an aspect class that works perfectly for this: DisableFormValueModelBindingAttribute.cs. With it, we tin can disable the model binding on the Controller Activity that we want to utilize.

Practice increase or disable the asking size limitation

This depends on your requirements. You can set the size to something reasonable depending on the file sizes you lot want to allow. If you become larger than 256MB (the current max for single block upload for blob storage), you may need to do the streaming setup described here and Too clamper the files beyond blobs. Be sure to read the most current documentation to make sure your file sizes are supported with the method you choose.

/// <summary> /// Upload an document using our streaming method /// </summary> /// <returns>A collection of document models</returns> [DisableFormValueModelBinding] [ProducesResponseType(typeof(List<DocumentModel>), 200)] [DisableRequestSizeLimit] [HttpPost("streamupload")] public async Chore<IActionResult> UploadDocumentStream() ... Do process the boundaries of the request and send the stream to Azure Blob Storage

Once again, this comes mostly from Microsoft's case, with some special processing to re-create the stream of the request body for a single file to Azure Blob Storage. The file content type can be read without touching the stream, along with the filename. Only remember, neither of these can always be trusted. You should encode the filename and if you really desire to prevent unauthorized types, y'all could go even further past adding some checking to read the first few bytes of the stream and verify the blazon.

var sectionFileName = contentDisposition.FileName.Value; // use an encoded filename in case in that location is annihilation weird var encodedFileName = WebUtility.HtmlEncode(Path.GetFileName(sectionFileName)); // at present make it unique var uniqueFileName = $"{Guid.NewGuid()}_{encodedFileName}"; // read the section filename to get the content type var fileContentType = MimeTypeHelper.GetMimeType(sectionFileName); // check the mime type confronting our list of allowed types var enumerable = allowedTypes.ToList(); if (!enumerable.Contains(fileContentType.ToLower())) { return new ResultModel<List<DocumentModel>>("fileType", "File blazon not immune: " + fileContentType); } Practice await at the concluding position of the stream to become the file size

If you lot want to get or save the filesize, you tin can bank check the position of the stream after uploading it to blob storage. Do this instead of trying to go the length of the stream beforehand.

DO remove any signing central from the Uri i if you are preventing direct downloads

The Uri that is generated equally part of the hulk volition include an admission token at the end. If y'all don't want to allow your users have direct hulk admission, you lot can trim this part off.

// fob to get the size without reading the stream in memory var size = section.Body.Position; // check size limit in case somehow a larger file got through. we can't do it until subsequently the upload because we don't desire to put the stream in memory if (maxBytes < size) { await blobClient.DeleteIfExistsAsync(); render new ResultModel<List<DocumentModel>>("fileSize", "File too big: " + encodedFileName); } var doc = new DocumentModel() { FileName = encodedFileName, MimeType = fileContentType, FileSize = size, // Do NOT include Uri query since it has the SAS credentials; This will return the URL without the querystring. // UrlDecode to convert %2F into "/" since Azure Storage returns it encoded. This prevents the binder from beingness included in the filename. Url = WebUtility.UrlDecode(blobClient.Uri.GetLeftPart(UriPartial.Path)) }; DO use a stream upload method to blob storage

In that location are multiple upload methods available, simply make sure you choose one that has an input of a Stream, and use the section.Body stream to ship the upload.

var blobClient = blobContainerClient.GetBlobClient(uniqueFileName); // utilize a CloudBlockBlob because both BlobBlockClient and BlobClient buffer into memory for uploads CloudBlockBlob blob = new CloudBlockBlob(blobClient.Uri); look blob.UploadFromStreamAsync(section.Torso); // set the type after the upload, otherwise will become an fault that blob does non exist await blobClient.SetHttpHeadersAsync(new BlobHttpHeaders { ContentType = fileContentType }); Practise functioning-profile your results

This may be the nearly important instruction. After y'all've written your code, run information technology in Release mode using the Visual Studio Operation Profiling tools. Compare your profiling results to that of a known retention-eating method, such every bit an IFormFile. Beware that different versions of the Azure Hulk Storage library may perform differently. And unlike implementations may perform differently as well! Here were some of my results.

To do this simple profiling, I used PostMan to upload multiple files of effectually 20MB in several requests. By using a drove, or by opening multiple tabs, y'all tin can submit multiple requests at a time to see how the retention of the application is consumed.

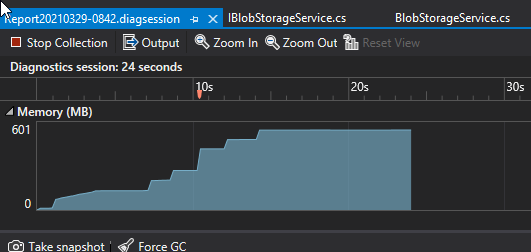

Starting time, using an IFormFile. You can see the memory usage increases rapidly for each asking using this method.

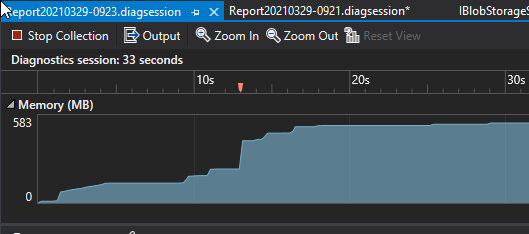

Next, using the latest version (v12) of the Azure Blob Storage libraries and a Stream upload method. Notice that information technology's not much meliorate than IFormFile! Although BlobStorageClient is the latest mode to interact with hulk storage, when I look at the memory snapshots of this performance information technology has internal buffers (at least, at the time of this writing) that cause it to not perform likewise well when used in this way.

var blobClient = blobContainerClient.GetBlobClient(uniqueFileName); await blobClient.UploadAsync(section.Trunk);

Only, using almost identical lawmaking and the previous library version that uses CloudBlockBlob instead of BlobClient, we can see a much meliorate retentiveness operation. The same file uploads result in a small increase (due to resource consumption that eventually goes back downward with garbage collection), only nothing near the ~600MB consumption similar higher up. I'm sure whatever retention issues exist with the latest libraries will be resolved somewhen, merely for now, I will use this method.

// apply a CloudBlockBlob because both BlobBlockClient and BlobClient buffer into retentiveness for uploads CloudBlockBlob blob = new CloudBlockBlob(blobClient.Uri); await blob.UploadFromStreamAsync(section.Body);

For your reference, here is a version of the upload service methods from that final profiling result:

/// <summary> /// Upload multipart content from a request body /// </summary> /// <param name="requestBody">body stream from the request</param> /// <param name="contentType">content blazon from the request</param> /// <returns></returns> public async Task<ResultModel<List<DocumentModel>>> UploadMultipartDocumentRequest(Stream requestBody, cord contentType) { // configuration values hardcoded here for testing var bytes = 104857600; var types = new List<string>{ "application/pdf", "epitome/jpeg", "prototype/png"}; var docs = await this.UploadMultipartContent(requestBody, contentType, types, bytes); if (docs.Success) { foreach (var md in docs.Upshot) { // hither we could save the document information to a database for tracking if (doc?.Url != null) { Debug.WriteLine($"Certificate saved: {doc.Url}"); } } } return docs; } /// <summary> /// Upload multipart content from a request torso /// based on microsoft case https://github.com/dotnet/AspNetCore.Docs/tree/master/aspnetcore/mvc/models/file-uploads/samples/ /// and large file streaming instance https://docs.microsoft.com/en-us/aspnet/cadre/mvc/models/file-uploads?view=aspnetcore-5.0#upload-large-files-with-streaming /// tin can accept multiple files in multipart stream /// </summary> /// <param proper noun="requestBody">the stream from the request body</param> /// <param name="contentType">content type from the asking</param> /// <param proper noun="allowedTypes">list of immune file types</param> /// <param name="maxBytes">max bytes allowed</param> /// <returns>a collection of certificate models</returns> public async Task<ResultModel<List<DocumentModel>>> UploadMultipartContent(Stream requestBody, cord contentType, Listing<cord> allowedTypes, int maxBytes) { // Cheque if HttpRequest (Grade Data) is a Multipart Content Blazon if (!IsMultipartContentType(contentType)) { render new ResultModel<List<DocumentModel>>("requestType", $"Expected a multipart asking, but got {contentType}"); } FormOptions defaultFormOptions = new FormOptions(); // Create a Collection of KeyValue Pairs. var formAccumulator = new KeyValueAccumulator(); // Make up one's mind the Multipart Purlieus. var boundary = GetBoundary(MediaTypeHeaderValue.Parse(contentType), defaultFormOptions.MultipartBoundaryLengthLimit); var reader = new MultipartReader(purlieus, requestBody); var department = await reader.ReadNextSectionAsync(); List<DocumentModel> docList = new Listing<DocumentModel>(); var blobContainerClient = GetBlobContainerClient(); // Loop through each 'Section', starting with the current 'Section'. while (section != null) { // Check if the electric current 'Section' has a ContentDispositionHeader. var hasContentDispositionHeader = ContentDispositionHeaderValue.TryParse(section.ContentDisposition, out ContentDispositionHeaderValue contentDisposition); if (hasContentDispositionHeader) { if (HasFileContentDisposition(contentDisposition)) { attempt { var sectionFileName = contentDisposition.FileName.Value; // utilise an encoded filename in instance there is annihilation weird var encodedFileName = WebUtility.HtmlEncode(Path.GetFileName(sectionFileName)); // at present make it unique var uniqueFileName = $"{Guid.NewGuid()}_{encodedFileName}"; // read the section filename to get the content type var fileContentType = MimeTypeHelper.GetMimeType(sectionFileName); // check the mime type against our list of allowed types var enumerable = allowedTypes.ToList(); if (!enumerable.Contains(fileContentType.ToLower())) { return new ResultModel<List<DocumentModel>>("fileType", "File type not allowed: " + fileContentType); } var blobClient = blobContainerClient.GetBlobClient(uniqueFileName); // use a CloudBlockBlob because both BlobBlockClient and BlobClient buffer into memory for uploads CloudBlockBlob blob = new CloudBlockBlob(blobClient.Uri); await blob.UploadFromStreamAsync(department.Body); // set the type after the upload, otherwise will get an mistake that blob does non exist wait blobClient.SetHttpHeadersAsync(new BlobHttpHeaders { ContentType = fileContentType }); // play tricks to get the size without reading the stream in memory var size = section.Body.Position; // cheque size limit in example somehow a larger file got through. nosotros can't do it until after the upload because we don't want to put the stream in memory if (maxBytes < size) { await blobClient.DeleteIfExistsAsync(); return new ResultModel<List<DocumentModel>>("fileSize", "File besides big: " + encodedFileName); } var dr. = new DocumentModel() { FileName = encodedFileName, MimeType = fileContentType, FileSize = size, // Practise Non include Uri query since it has the SAS credentials; This will render the URL without the querystring. // UrlDecode to convert %2F into "/" since Azure Storage returns information technology encoded. This prevents the binder from beingness included in the filename. Url = WebUtility.UrlDecode(blobClient.Uri.GetLeftPart(UriPartial.Path)) }; docList.Add together(doc); } catch (Exception eastward) { Panel.Write(e.Message); // could be specific azure mistake types to look for hither return new ResultModel<List<DocumentModel>>(nada, "Could not upload file: " + e.Message); } } else if (HasFormDataContentDisposition(contentDisposition)) { // if for some reason other form data is sent it would get processed here var key = HeaderUtilities.RemoveQuotes(contentDisposition.Proper name); var encoding = GetEncoding(section); using (var streamReader = new StreamReader(section.Torso, encoding, detectEncodingFromByteOrderMarks: true, bufferSize: 1024, leaveOpen: true)) { var value = await streamReader.ReadToEndAsync(); if (String.Equals(value, "undefined", StringComparison.OrdinalIgnoreCase)) { value = String.Empty; } formAccumulator.Suspend(fundamental.Value, value); if (formAccumulator.ValueCount > defaultFormOptions.ValueCountLimit) { return new ResultModel<List<DocumentModel>>(null, $"Form fundamental count limit {defaultFormOptions.ValueCountLimit} exceeded."); } } } } // Begin reading the next 'Section' inside the 'Trunk' of the Asking. section = await reader.ReadNextSectionAsync(); } return new ResultModel<Listing<DocumentModel>>(docList); } I hope you find this useful equally you tackle file upload operations of your ain.

Source: https://trailheadtechnology.com/dos-and-donts-for-streaming-file-uploads-to-azure-blob-storage-with-net-mvc/

0 Response to "Upload Large Files to Azure Blob Storage"

Publicar un comentario